The rise of illegal streaming services has a major impact on many industries, telecommunications in particular. For telecom companies, these services are a growing problem because they cause significant revenue losses.

Content piracy has a significant financial impact worldwide: estimates suggest that it costs the media and entertainment industry billions of dollars in losses each year.

When people use illegal streaming services to watch copyrighted content, either for free or for a smaller fee paid to an illegal vendor, they bypass the legitimate distribution channels that generate revenue. This is a loss for content owners and also for telecommunication companies, which, as distributors, lose revenue from customers who would otherwise be paying for legitimate streaming services.

To fight the spread of illegal streaming services, it is necessary to understand the different actors involved in these fraud schemes and, sometimes, to work undercover to gain access to the service so that the necessary action can be taken. Fraudsters usually promote their illegal services on social media marketplaces, such as Facebook and Instagram, while negotiations take place on instant messaging applications, such as Messenger, WhatsApp, and Telegram. To get access to these services, one must contact the vendor on the appropriate platform, ask for details about the content packages, and ultimately request a trial.

Given the nature of their business, vendors are extremely careful about whom they give access to. Establishing contact successfully depends on keeping the vendor's suspicion low, since any suspicion can compromise access to their illegal services altogether. Until now, these conversations had to be carried out by a careful human agent working undercover. In our attempts, we found that a simple conversational AI, like the chatbots available on the support pages of many websites, would stand out and ruin the conversation.

With the proliferation and evolution of Large Language Models (LLMs), however, it is now possible to use artificial intelligence to generate text conversations that are indistinguishable from those of a human. The question that remains is: can an LLM-based chatbot interact successfully with an illegal streaming vendor without being exposed by the fraudsters?

AI-based chatbots working as undercover agents to dismantle fraudsters

A Large Language Model (LLM) is a transformer-based neural network, pre-trained on a vast amount of data, that is typically used for Natural Language Processing (NLP) and Natural Language Generation (NLG) tasks such as text summarization, text generation, translation, question answering, and zero-shot classification. During pre-training, the model learns general language patterns from this data in a self-supervised way, which gives it a good understanding of the language before it is fine-tuned for a specific task.

Some of the best-known examples of these models are OpenAI's Generative Pre-trained Transformer (GPT) models, including GPT-2, GPT-3, and, more recently, the groundbreaking ChatGPT (based on GPT-3.5) and GPT-4. Through OpenAI's public API, GPT-3 (the model available at the time) could be used to create a chatbot that interacts with illegal streaming service vendors.

GPT-3 has 175 billion parameters and achieves strong performance on several NLP tasks, including generating human-like text. However, to answer the question posed in this article, the chatbot must also meet additional functional requirements to be considered a suitable solution, namely:

- The chatbot must communicate in a way that is practically indistinguishable from a human, avoiding repetition throughout the conversation and, above all, never contradicting itself.

- It must take the initiative to ask the seller about the streaming service in order to extract information about the content, price, and payment methods.

- It should also respond naturally, in a human-like way, to questions posed by the seller, without raising suspicions about its nature or compromising access to the services the seller provides.
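One way to make the second requirement concrete is to track which pieces of information (content, price, payment methods) are still missing and to steer the next question toward them. The sketch below is hypothetical: the slot names, keyword lists, and follow-up questions are illustrative assumptions, not the approach used in this project, where the LLM itself drove the questioning.

```python
from dataclasses import dataclass, field

# Hypothetical keywords suggesting a vendor message answered a given topic.
SLOT_KEYWORDS = {
    "content": ("channel", "sports", "movies", "series"),
    "price": ("€", "$", "price", "month", "euros"),
    "payment": ("paypal", "card", "transfer", "crypto", "mbway"),
}

# Hypothetical casual follow-up question for each missing topic.
FOLLOW_UPS = {
    "content": "Which channels are included in the package?",
    "price": "How much is it per month?",
    "payment": "How can I pay for it?",
}


@dataclass
class InquiryTracker:
    """Records which topics the vendor has already covered."""
    filled: dict = field(default_factory=dict)  # topic -> vendor message that covered it

    def update(self, vendor_message: str) -> None:
        text = vendor_message.lower()
        for slot, keywords in SLOT_KEYWORDS.items():
            if slot not in self.filled and any(k in text for k in keywords):
                self.filled[slot] = vendor_message

    def missing(self) -> list:
        # Dict order is preserved, so topics are returned in a fixed order.
        return [slot for slot in SLOT_KEYWORDS if slot not in self.filled]

    def next_question(self):
        """Follow-up for the first missing topic, or None when everything is known."""
        remaining = self.missing()
        return FOLLOW_UPS[remaining[0]] if remaining else None
```

For example, after `update("We have all sports channels for 15€ a month")`, only `"payment"` remains missing, so `next_question()` returns the payment question. In practice, the missing topics would more likely be fed into the model's instructions than asked verbatim, to keep the phrasing varied.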

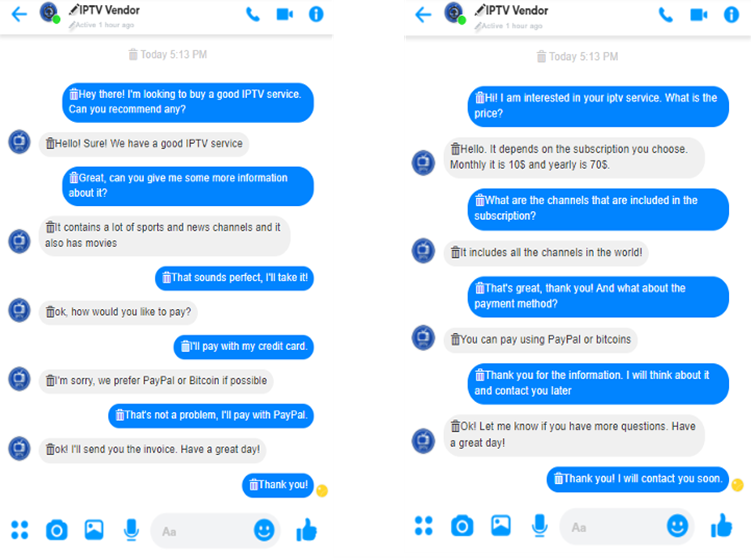

Because the model is accessed through an API, the desired behavior of the chatbot, including which information to gather and which decisions to make, can be specified as instructions in the model's input. With the right inputs, we successfully generated a conversation with a vendor, as shown in Figure 1.

Figure 1 - GPT-3 simulated conversation with a seller of illegal streaming services.

The example on the left in Figure 1 shows a chatbot configured to ask for more information about the service and to prefer paying by credit card or PayPal. The example on the right shows a more restricted chatbot, configured to ask which channels the service includes and which payment methods are accepted, but not to take any further action.
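Since GPT-3 is a text-completion model, the behavioral instructions and the conversation so far can be packed into a single prompt that the model continues. The following sketch shows how the two configurations described above might be expressed; the `ChatbotConfig` fields and the prompt wording are hypothetical approximations, not the prompts used in this work, and the call that sends the prompt to the API is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class ChatbotConfig:
    """Hypothetical description of what the undercover chatbot should do."""
    goals: list                 # information the chatbot should ask for
    payment_preferences: list   # preferred payment methods, if any
    may_commit: bool            # whether it may go beyond gathering information


def build_prompt(config: ChatbotConfig, history: list) -> str:
    """Assemble a completion-style prompt: instructions first, then the dialogue."""
    lines = [
        "You are a regular customer chatting with a seller of streaming subscriptions.",
        "Write short, casual messages. Never repeat a question and never contradict yourself.",
        "Ask about: " + ", ".join(config.goals) + ".",
    ]
    if config.payment_preferences:
        lines.append("If asked how you want to pay, prefer: "
                     + " or ".join(config.payment_preferences) + ".")
    if not config.may_commit:
        lines.append("Do not agree to buy or pay for anything.")
    lines.append("")
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    lines.append("Customer:")  # the model completes the customer's next message
    return "\n".join(lines)


# Rough approximation of the left-hand configuration in Figure 1.
LEFT = ChatbotConfig(goals=["the service details"],
                     payment_preferences=["credit card", "PayPal"],
                     may_commit=True)

# Rough approximation of the right-hand, more restricted configuration.
RIGHT = ChatbotConfig(goals=["the included channels", "the payment method"],
                      payment_preferences=[],
                      may_commit=False)
```

Ending the prompt with `Customer:` asks the model to write only the next customer message; the returned text would then be checked before sending it to the vendor.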

The road ahead in using AI to fight content piracy

Despite these very promising initial results, there is still room for improvement in our AI-based "undercover agent." First, the scenario was tested in a controlled environment, where each turn consisted of a single message. In the real world, however, people often send several messages about the same question or subject, and sometimes introduce a new subject. The vendor may also ask questions outside the scope of the streaming service being negotiated. These nuances add complexity to the chatbot's behavior, which might compromise its performance and raise suspicions.
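A common mitigation for multi-message turns is to wait for a quiet period before replying and to merge consecutive messages from the vendor into a single turn before passing them to the model. A minimal sketch, assuming messages arrive as `(timestamp, sender, text)` tuples and a hypothetical 60-second quiet window; neither detail comes from the project itself.

```python
from typing import List, Optional, Tuple

Message = Tuple[float, str, str]  # (unix timestamp, sender, text)


def group_turns(messages: List[Message], quiet_window: float = 60.0) -> List[Tuple[str, str]]:
    """Merge consecutive messages from the same sender into one turn.

    A new turn starts when the sender changes or when the gap since the
    previous message exceeds quiet_window seconds.
    """
    turns: List[Tuple[str, str]] = []
    last_time: Optional[float] = None
    for timestamp, sender, text in sorted(messages):
        same_turn = (
            bool(turns)
            and turns[-1][0] == sender
            and last_time is not None
            and timestamp - last_time <= quiet_window
        )
        if same_turn:
            turns[-1] = (sender, turns[-1][1] + "\n" + text)
        else:
            turns.append((sender, text))
        last_time = timestamp
    return turns


def ready_to_reply(messages: List[Message], now: float, quiet_window: float = 60.0) -> bool:
    """Reply only if the vendor spoke last and has been silent for quiet_window seconds."""
    if not messages:
        return False
    last_time, sender, _ = max(messages)
    return sender == "vendor" and now - last_time >= quiet_window
```

Waiting also has a side benefit: an instant reply to every message is itself a bot-like signal, so a short, variable delay may help keep suspicion low.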

Generating text indistinguishable from human writing is challenging in itself. While producing a single unsuspicious answer may be achievable, the subsequent interactions or the context of the conversation might still lead to suspicious behavior. The challenge is compounded by the fact that vendors are usually quite knowledgeable about technology and, given the nature of their services, extremely careful not to be caught. Even with an extensive collection of real conversations, it is impossible to cover every possibility, especially suspicious questions from the vendor, the need to insist on an answer, or situations involving humor or sarcasm.

On a technical level, there are also challenges in integrating the chatbot with the platforms vendors use to contact their clients. Some social media and instant messaging applications provide an API for interacting with the platform, but obtaining authentication credentials can be very complex. In addition, the interactions rarely happen in real time, so the chatbot must preserve the conversation history and remember what it has already asked. A vendor may take several days to answer, and the chatbot must know exactly where the conversation stands when a new message arrives.
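To survive replies that arrive days apart, the chatbot's state can be kept outside the model and reloaded whenever a new message arrives. A minimal sketch, assuming one JSON file per vendor (a hypothetical storage choice; a database would serve equally well) and illustrative field names:

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class ConversationState:
    """What the chatbot must remember between (possibly days-apart) replies."""
    vendor_id: str
    history: list = field(default_factory=list)  # [speaker, text] pairs
    asked: list = field(default_factory=list)    # questions the bot already asked
    facts: dict = field(default_factory=dict)    # e.g. price, channels learned so far

    def record(self, speaker: str, text: str) -> None:
        # Lists (not tuples) so the state compares equal after a JSON round trip.
        self.history.append([speaker, text])
        if speaker == "bot" and text.endswith("?"):
            self.asked.append(text)


class StateStore:
    """Hypothetical JSON-file store with one file per vendor conversation."""

    def __init__(self, directory: Path):
        self.directory = Path(directory)
        self.directory.mkdir(parents=True, exist_ok=True)

    def _path(self, vendor_id: str) -> Path:
        return self.directory / f"{vendor_id}.json"

    def load(self, vendor_id: str) -> ConversationState:
        path = self._path(vendor_id)
        if not path.exists():
            return ConversationState(vendor_id=vendor_id)  # first contact
        return ConversationState(**json.loads(path.read_text(encoding="utf-8")))

    def save(self, state: ConversationState) -> None:
        self._path(state.vendor_id).write_text(
            json.dumps(asdict(state), ensure_ascii=False), encoding="utf-8")
```

On each incoming message, the bot would load the state, append the vendor's text, rebuild the model input from `history` and `asked`, and save the state again after replying.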

Additionally, the chatbot's success depends not only on text generation but also on Natural Language Understanding (NLU). Some languages, such as Portuguese, are more challenging than others, and the chatbot must be able to understand spelling errors and the informal language used on social media.
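As a small illustration of tolerating misspellings, approximate string matching can map noisy words onto known topics. The sketch below uses Python's standard `difflib` and `unicodedata` modules with a tiny, hypothetical Portuguese vocabulary; it is only a lightweight pre-processing idea, since in practice the LLM itself (or a dedicated NLU model) would handle most of this understanding.

```python
import difflib
import unicodedata

# Hypothetical topic vocabulary (Portuguese keywords with accents stripped).
TOPICS = {
    "preco": "price",
    "pagamento": "payment",
    "canais": "channels",
    "teste": "trial",
}


def strip_accents(text: str) -> str:
    """Remove diacritics so that 'preço' and 'preco' compare equal."""
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def detect_topics(message: str, cutoff: float = 0.8) -> set:
    """Return topics whose keyword approximately matches some word in the message."""
    found = set()
    for word in strip_accents(message.lower()).split():
        word = word.strip(".,!?;:")
        match = difflib.get_close_matches(word, TOPICS.keys(), n=1, cutoff=cutoff)
        if match:
            found.add(TOPICS[match[0]])
    return found
```

With this cutoff, the misspelled "pagamneto" still matches "pagamento" (similarity of about 0.89), so "Qual é o preço do pagamneto?" is mapped to the price and payment topics.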

Finally, interacting with illegal streaming service vendors is very delicate and should be done with the minimum possible exposure. Being exposed has a high cost for the business, because there is no second attempt: a new identity must be created, the approach to the vendor must change, and sometimes access to the vendor is compromised altogether, making it difficult to establish a new, unsuspicious contact.

Despite the challenges of creating chatbots that communicate like humans and stay believable throughout a conversation, LLMs have shown great promise in this area. As these technologies continue to spread, we can expect chatbots to mimic human conversation more closely than ever, opening up new opportunities for human-like communication and collaboration.

This research is a result of the co-funded project “RAID.Piracy – AI for Content Protection” between Mobileum, the telecom operator NOS, and Instituto Politécnico do Cávado e do Ave (IPCA), under the Portugal 2020 program.

I want to express my gratitude to my colleague João Rei for his valuable contribution to this blog, which has significantly enhanced the quality of the content with his insightful perspectives and expertise.

